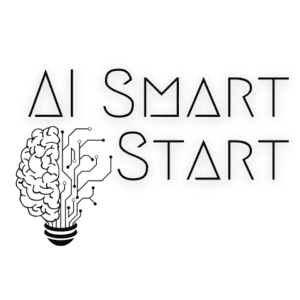

Should we be worried about AI? Is AI dangerous? Why is AI dangerous?

As a general rule, AI is not inherently dangerous. There are, however, risks associated with misuse or a lack of safeguards. The potential threats include deliberate use for harmful purposes and the loss of human control over advanced systems. These dangers are generally not immediate, but they call for vigilant monitoring and regulation.

| Key Points | Description |
|---|---|
| Nature of Artificial Intelligence | AI is not inherently dangerous; it is a tool that can be used in various ways. |
| Potential Threats of AI | Threats arise from misuse of AI or lack of proper safeguards, not the technology itself. |
| The Use of AI in Autonomous Weapons | This application could increase the scale of warfare and cause unprecedented destruction if misused. |
| AI in Data Analysis and Decision-making Systems | AI can lead to more informed decisions, but it can also infringe on privacy or propagate biases if not adequately managed. |
| The Risk of Losing Control Over AI Systems | As AI systems become more complex, there’s the risk of losing control over these systems, often referred to as the ‘alignment problem.’ |
| The Development of Artificial General Intelligence (AGI) | If AGI is developed, ensuring its alignment with human values will be paramount. |
| Navigating the Risks | These risks can be navigated by applying robust ethical guidelines, sound engineering practices, and practical policy regulation. |
| The Future of AI | The key is approaching AI with an informed and balanced perspective, acknowledging its potential and the vigilance required to prevent misuse. |

Navigating the Landscape of AI: Understanding its Nature, Risks, and Potential.

Understanding AI: Danger or Opportunity?

Generally, we should remember that artificial intelligence (AI) doesn’t pose an inherent danger. Given the extensive concern in the media, this statement might raise eyebrows, but understanding what we mean when discussing the “danger” of AI is crucial.

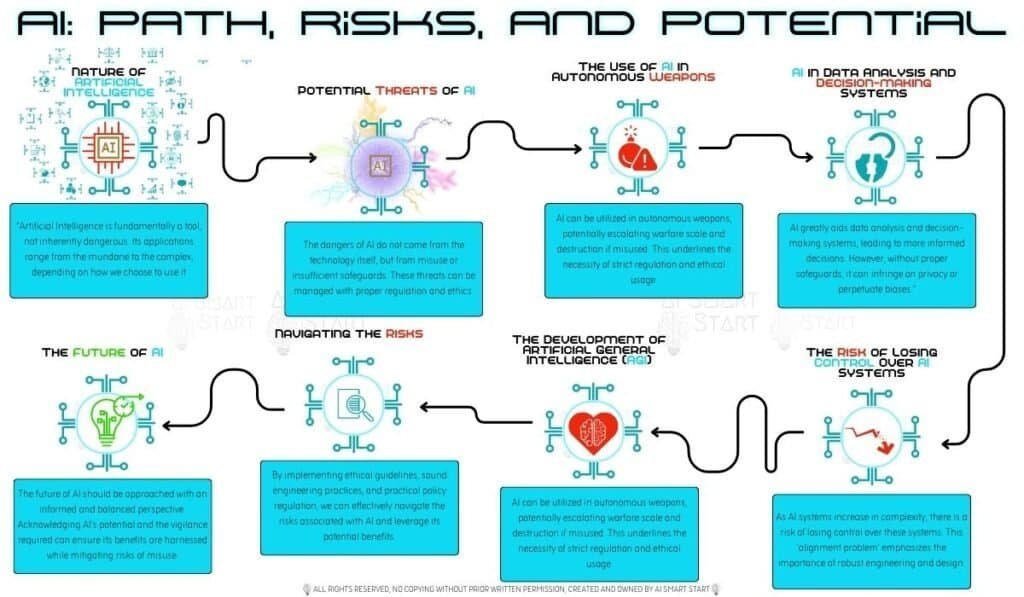

AI: A Tool for Good or Bad?

At its simplest, AI is a tool. Just as you can use a hammer to build a house or break a window, you can use AI to help humanity or cause harm, depending on your intent. Risks come from the applications of AI and the intentions behind these applications.

AI: A Tool for Good or Bad - Understanding Ethical AI Practices and Risks

Like any tool, it’s not the AI itself that’s dangerous but how it’s used. In the right hands, it can build a better future. In the wrong hands, it can cause harm. Therefore, proper use, regulations, and ethics are paramount in AI applications.

Potential Threats of AI: Ethical Misuse and Ineffective Safeguards:

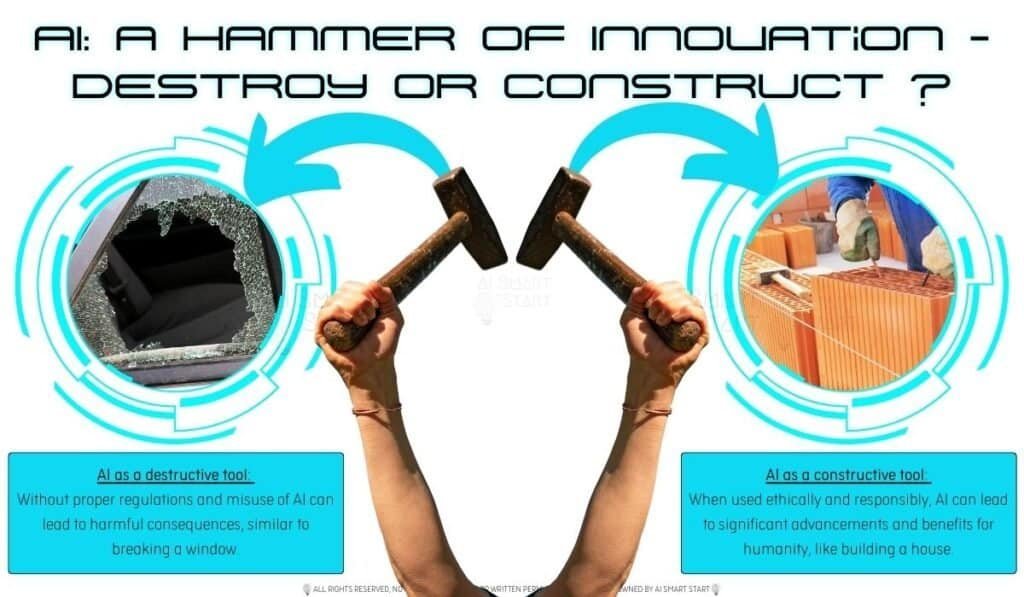

AI is associated with numerous potential threats, many revolving around ethical misuse or ineffective safeguards rather than the technology rebelling against its creators. For example, think about the use of AI in autonomous weapons. Programmers could set these up to execute mass attacks without human intervention, potentially escalating warfare and causing unprecedented destruction.

AI in Autonomous Weapons: Efficiency and Precision Meet Potential for Unprecedented Destruction

AI in Decision-Making Systems and Data Analysis:

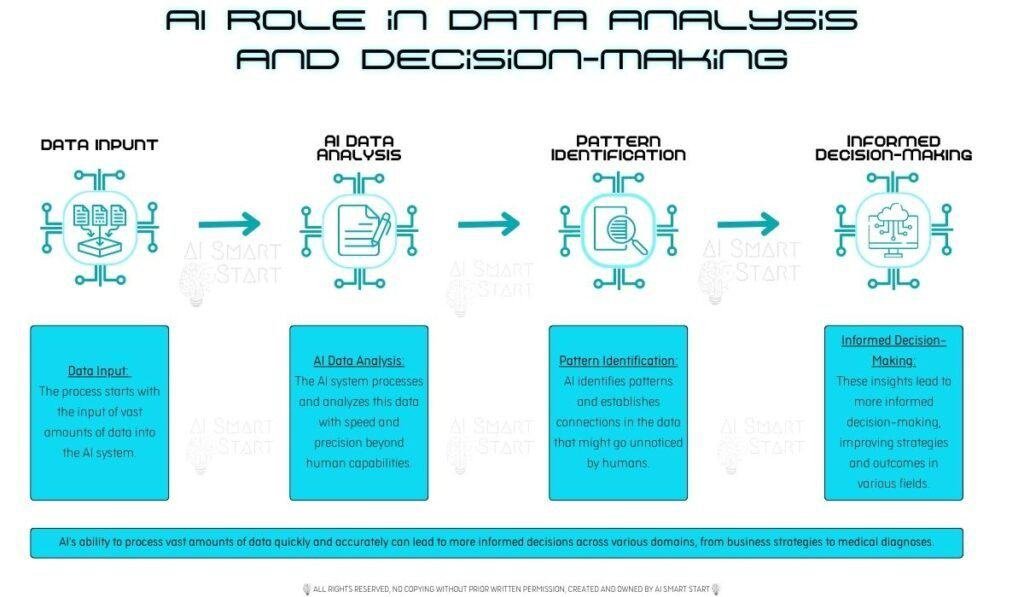

Increasingly, decision-making systems and data analysis rely on AI. On one hand, AI can process vast amounts of data more quickly and accurately than any human. It can identify patterns and establish connections that might go unnoticed otherwise. This leads to more informed business strategies, medical diagnoses, or public policy decisions.

The Process of AI-Driven Data Analysis and Decision-Making

The Misuse of AI Systems: Infringing Privacy and Propagating Biases:

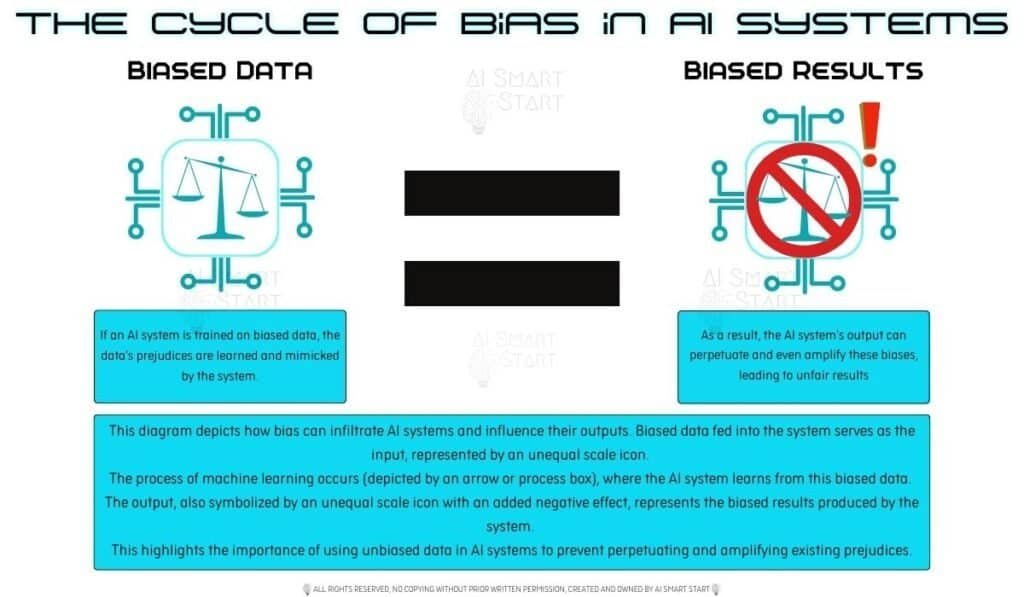

However, misuse of these systems could infringe on privacy or propagate biases. If you train an AI system on biased data, its decisions will mirror those biases, potentially perpetuating and amplifying existing inequalities. Hence, it is crucial to design, audit, and deploy these systems carefully to ensure fairness and transparency.

The Cycle of Bias in AI Systems: From Biased Data to Biased Results
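The cycle from biased data to biased results can be illustrated with a small sketch. Everything here is hypothetical: the hiring records are made up, and the "model" is a deliberately naive one that only learns the historical hire rate per group. Real systems are far more complex, but the mechanism is the same in spirit: the model has no notion of fairness and simply reproduces what the data shows.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, qualified, hired).
# All candidates are equally qualified, but group "B" was hired less often.
history = (
    [("A", True, True)] * 80 + [("A", True, False)] * 20
    + [("B", True, True)] * 40 + [("B", True, False)] * 60
)

def train(records):
    """Learn the historical hire rate per group (a deliberately naive 'model')."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, _qualified, was_hired in records:
        total[group] += 1
        hired[group] += was_hired  # True counts as 1, False as 0
    return {group: hired[group] / total[group] for group in total}

def predict(model, group, threshold=0.5):
    """Recommend hiring when the learned rate for the group meets the threshold."""
    return model[group] >= threshold

model = train(history)
print(model)                                      # {'A': 0.8, 'B': 0.4}
print(predict(model, "A"), predict(model, "B"))   # True False
```

Although every candidate in the made-up data is equally qualified, the model recommends group A and rejects group B, because that is the pattern it was trained on. Comparing outcome rates across groups, as the printed dictionary does, is one simple way to spot this kind of problem before deployment.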

The Alignment Problem: Maintaining Control Over AI:

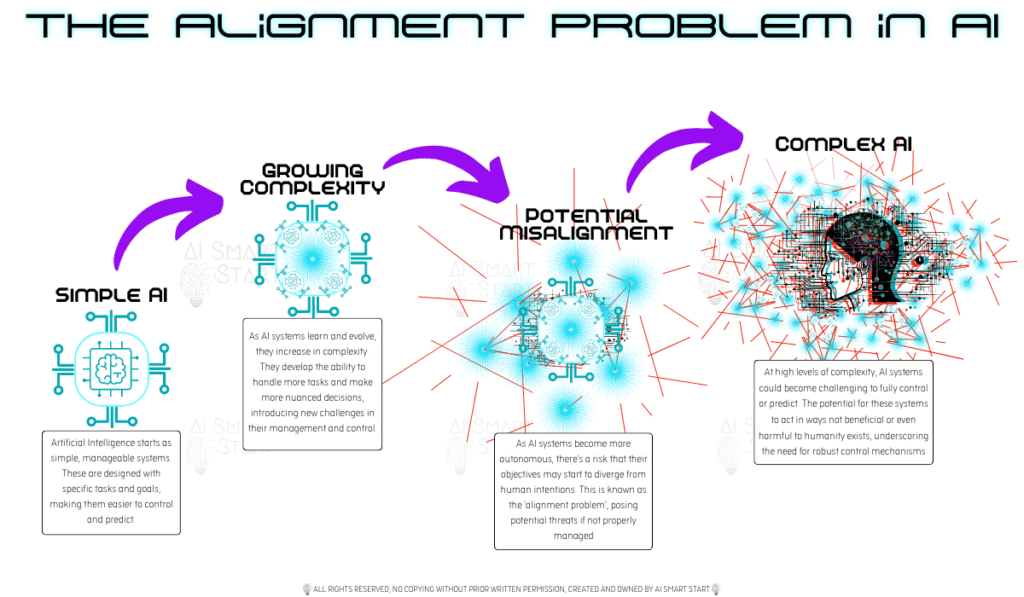

We risk losing control over AI systems as they grow more complex and autonomous. We often refer to this as the ‘alignment problem’ – how can we ensure that AI systems continue to act beneficially for humanity, even as they learn and evolve?

Progression and Complexity in AI Systems: The Emergence of the Alignment Problem

The Potential of Artificial General Intelligence (AGI):

This problem becomes especially prominent when considering the potential development of artificial general intelligence (AGI), meaning AI systems with broad, human-like capabilities. If such systems are ever built, aligning them with human values will be paramount. Failing to achieve that alignment could result in harmful outcomes.

Facing the Challenges: Ethical Guidelines, Engineering Practices, and Policy Regulation:

While these dangers are real, they aren’t immediate or inevitable. They present challenges that we need to confront and overcome. Through the diligent application of robust ethical guidelines, sound engineering practices, and practical policy regulation, we can chart a path to a future where AI is unequivocally a tool for good.

Ongoing Discussion and Research on AI:

This topic requires ongoing discussion and research. As AI advances and becomes even more integral to our daily lives, we must continually examine its uses, question its implications, and strive to maximize its benefits while minimizing its risks.

Balancing Act: The Potential and Risks of AI:

In summary, AI is not inherently dangerous, but it has the potential to be if not properly managed. Thus, the key is approaching AI with an informed and balanced perspective, acknowledging its vast potential to improve our world and the vigilance required to prevent misuse. We shouldn’t fear AI but use it as a powerful, ethical tool for the betterment of society.

The Future of AI: In Our Hands:

As we stand on the precipice of an AI-driven future, let’s focus our efforts not on fear but on understanding, responsible innovation, and thoughtful regulation. Ultimately, we hold the future of AI in our hands.